Upgrading your data center network to 100 Gigabit Ethernet (100G) is no longer a luxury but a necessity for many modern enterprises. As discussed in our previous post, “When to Upgrade to 100G”, the relentless growth of data traffic, fueled by cloud computing, video streaming, real-time applications, and particularly the surge in AI/ML workloads, is rapidly outstripping the capabilities of traditional 10G and 40G networks.

This post serves as your practical guide, breaking down the essential components and considerations for how to build a 100G data center. We’ll delve into architecture, cabling strategies, and crucial equipment selection, providing actionable insights for network engineers, IT managers, and enterprise buyers preparing for this significant upgrade.

Understanding 100G Data Center Architecture

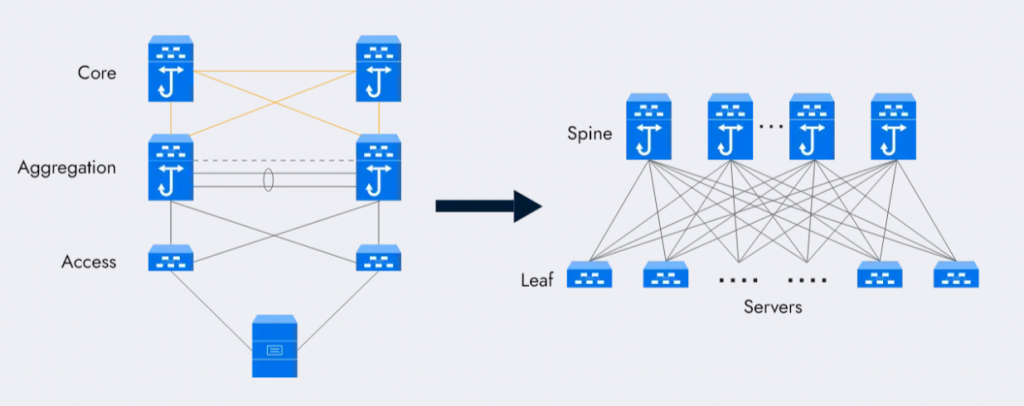

The foundational design of your data center network is paramount for achieving optimal 100G performance. While older three-tier architectures (Core-Aggregation-Access) once dominated, the demands of modern high-bandwidth, low-latency applications have driven an evolution towards flatter, more efficient topologies.

The Power of Spine-Leaf Topology

The Spine-Leaf architecture has emerged as the de facto standard for 100G data centers due to its ability to deliver ultra-low latency, non-blocking throughput, and linear scalability. This design offers numerous advantages over traditional models by ensuring any-to-any connectivity, reducing congestion, and significantly improving network resilience.

- Leaf Switches: These are the access layer switches, deployed at the top of each rack (ToR), directly connecting to servers and storage units. In a 100G environment, leaf switches typically provide 25G or 50G downstream ports to servers, with 100G uplinks to the spine layer. For example, the Asterfusion CX308P-N is a leaf (ToR) switch with 48x25G downlink ports and 8x100G QSFP28 uplinks.

- Spine Switches: Forming the backbone of the 100G network, spine switches interconnect all leaf switches. Each leaf switch connects to every spine switch, creating multiple active paths for data flow. This redundancy ensures that if one path fails, data can simply take another, enhancing resilience and reducing bottlenecks.
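To check how close a given leaf comes to non-blocking, compare its server-facing capacity with its uplink capacity. The sketch below is a minimal illustration, not a sizing tool: the function names are hypothetical, the port counts are the ones quoted above for the CX308P-N, and the fabric size of 16 leaves and 8 spines is an assumption chosen only for the example.

```python
def oversubscription_ratio(down_ports: int, down_gbps: float,
                           up_ports: int, up_gbps: float) -> float:
    """Return downlink capacity divided by uplink capacity for one leaf."""
    if up_ports <= 0 or up_gbps <= 0:
        raise ValueError("a leaf needs at least one uplink")
    return (down_ports * down_gbps) / (up_ports * up_gbps)


def fabric_links(leaves: int, spines: int) -> int:
    """Every leaf connects to every spine, so links = leaves * spines."""
    return leaves * spines


if __name__ == "__main__":
    # 48x25G downlinks and 8x100G uplinks, as quoted for the CX308P-N.
    ratio = oversubscription_ratio(48, 25, 8, 100)
    print(f"oversubscription: {ratio:.2f}:1")
    # Hypothetical fabric size, used only for illustration.
    print(f"leaf-spine links: {fabric_links(leaves=16, spines=8)}")
```

A ratio of 1.0 or lower means the leaf can forward all server traffic upstream at once. Anything higher is oversubscribed, which is often acceptable but worth knowing before you size the spine layer.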

Choosing the Right Chipset for Each Architectural Layer

The performance heart of your 100G switches lies in their Application-Specific Integrated Circuits (ASICs) and CPU. Different ASICs are optimized for distinct use cases:

- Marvell Teralynx 7: Ideal for ultra-low-latency, high-performance environments like AI training and inference clusters, High-Frequency Trading (HFT) systems, and financial backbones. It offers latency as low as 400-520 nanoseconds (e.g., Asterfusion CX564P-N at 400ns, CX532P-N at 520ns), massive packet throughput up to 6300 Mpps, and a large 70MB packet buffer for superior congestion tolerance in lossless Ethernet scenarios like RoCEv2. This ASIC suits spine or core layers in latency-sensitive data center fabrics.

- Marvell Falcon (Prestera CX 8500): Optimized for scalable, cloud-driven networks and large-scale, multi-tenant virtualized data center environments. Falcon-based switches offer high routing table capacities (e.g., 288K IPv4 and 144K IPv6 routes) and 128K MAC address entries, crucial for managing thousands of VMs, containers, and overlay networks (VXLAN EVPN). This ASIC suits leaf (ToR) and spine roles as well as edge routing.
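Datasheet packet rates such as the Mpps figure above are easier to judge next to the theoretical line rate of the ports they serve. The sketch below computes that line rate for a given frame size. The 20 bytes of per-frame wire overhead (preamble, start delimiter, inter-frame gap) are standard Ethernet; the function name and the choice of frame sizes are assumptions for illustration.

```python
# Preamble (7) + start-of-frame delimiter (1) + inter-frame gap (12).
WIRE_OVERHEAD_BYTES = 20


def line_rate_mpps(ports: int, gbps_per_port: float, frame_bytes: int) -> float:
    """Packets per second (in millions) needed to fill all ports with frames of one size."""
    if frame_bytes <= 0:
        raise ValueError("frame size must be positive")
    bits_per_frame = (frame_bytes + WIRE_OVERHEAD_BYTES) * 8
    return ports * gbps_per_port * 1e9 / bits_per_frame / 1e6


if __name__ == "__main__":
    # Port counts quoted in this post for the CX532P-N and CX564P-N.
    for ports in (32, 64):
        for frame in (64, 256, 1518):
            mpps = line_rate_mpps(ports, 100, frame)
            print(f"{ports}x100G, {frame}-byte frames: {mpps:,.0f} Mpps")
```

Small frames dominate the packet rate, so comparing a switch's rated Mpps with these numbers shows at which frame sizes it can sustain full line rate on every port.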

Cabling Your 100G Data Center

The transition to 100G requires a robust cabling infrastructure, primarily relying on fiber optics.

1. Fiber Optic Foundations: SMF vs. MMF

Fiber optic cables form the backbone of 100G networks, carrying more data over longer distances than copper.

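- Multi-Mode Fiber (MMF): Used for shorter distances within data centers and LANs. MMF carries multiple light modes, which gives high bandwidth over short runs but causes modal dispersion that limits reach. The commonly quoted 150-300m figures apply to 10G and 40G links; at 100G, 100GBASE-SR4 is specified for 70m over OM3 and 100m over OM4.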

- Single-Mode Fiber (SMF): Uses a single light mode, ideal for long-distance transmission (up to 40 km) with minimal signal loss.

2. Fiber Types and Cabling Recommendations

| Fiber Type | Max Distance | Connector Type | Typical Use Case |
|---|---|---|---|
| MMF (OM3/OM4/OM5) | 70-100m at 100GBASE-SR4 (150-300m at 10G/40G) | LC, MPO-12/24 | Short intra-data center links |
| SMF (OS1/OS2) | Up to 40 km | LC, MPO-12/24 | Long-haul and inter-data center |
| DAC | Up to 7m | Integrated | Short rack/cabinet connections |
| AOC | Up to 100m+ | Integrated | High-performance, low power |
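One way to apply the table is to filter the media types by link length before comparing cost. The sketch below is a rough first pass under stated assumptions: the DAC, AOC, and SMF limits are taken from the table above, the MMF limit assumes 100GBASE-SR4 over OM4 (100m), and the function name is hypothetical. Always confirm reach against the datasheet of the specific cable or transceiver.

```python
# Reach limits in metres. DAC, AOC and SMF values come from the table above;
# the MMF value assumes 100GBASE-SR4 over OM4. Check datasheets before ordering.
MAX_REACH_M = {
    "DAC": 7,
    "AOC": 100,
    "MMF": 100,
    "SMF": 40_000,
}


def viable_media(distance_m: float) -> list[str]:
    """Return every media type whose assumed reach covers the link length."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return [name for name, reach in MAX_REACH_M.items() if distance_m <= reach]


if __name__ == "__main__":
    for metres in (3, 50, 2_000):
        print(f"{metres} m: {', '.join(viable_media(metres))}")
```

The function returns every option that fits rather than a single winner, because the final choice between them usually comes down to cost, power, and what is already installed.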

3. 100G Transmission Modes and Connectors

The QSFP28 transceiver form factor is the preferred choice for efficient and powerful 100G connectivity. QSFP28 ports offer high density and support speeds including 100GbE, 50GbE, and 25GbE. Their breakout capability allows a single 100G port to be split into four 25G or four 10G connections, maximizing port utilization.
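When planning a mixed deployment, it helps to count what is left after some ports are broken out. The following sketch assumes a four-lane breakout (4x25G) as described above. The function name and the example split of 8 out of 32 ports are hypothetical.

```python
def plan_breakout(total_qsfp28: int, broken_out: int, lanes: int = 4) -> dict[str, int]:
    """Count native 100G ports and 25G breakout ports after splitting some QSFP28 ports."""
    if not 0 <= broken_out <= total_qsfp28:
        raise ValueError("broken_out must be between 0 and total_qsfp28")
    return {
        "native_100g": total_qsfp28 - broken_out,
        "breakout_25g": broken_out * lanes,
    }


if __name__ == "__main__":
    # Hypothetical plan: break out 8 of the 32 ports on a 32x100G switch.
    print(plan_breakout(total_qsfp28=32, broken_out=8))
```

Whether a given port supports breakout, and in which groupings, depends on the switch and its NOS, so treat the result as an upper bound until you have checked the platform documentation.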

Different 100G Ethernet standards dictate cabling requirements:

- 100GBASE-SR4: 4 lanes of 25G using MTP/MPO-12 cabling.

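- 100GBASE-SR10: 10 lanes of 10G using MTP/MPO-24 cabling. This is an older standard found on CFP and CXP modules rather than on four-lane QSFP28 ports.

- Reach depends on the fiber grade: 100GBASE-SR4, for example, is specified for 70m over OM3 and 100m over OM4.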
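The lane counts above translate directly into fiber counts, because parallel optics use one transmit and one receive fiber per lane. The sketch below works through that arithmetic for the two standards listed. The `Optic` class and its field names are illustrative assumptions, not part of any library.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Optic:
    name: str
    lanes: int
    gbps_per_lane: int
    connector_fibers: int  # fiber positions in the MPO connector

    @property
    def total_gbps(self) -> int:
        return self.lanes * self.gbps_per_lane

    @property
    def fibers_used(self) -> int:
        # Parallel optics: one transmit and one receive fiber per lane.
        return 2 * self.lanes

    @property
    def fibers_idle(self) -> int:
        return self.connector_fibers - self.fibers_used


SR4 = Optic("100GBASE-SR4", lanes=4, gbps_per_lane=25, connector_fibers=12)
SR10 = Optic("100GBASE-SR10", lanes=10, gbps_per_lane=10, connector_fibers=24)

if __name__ == "__main__":
    for optic in (SR4, SR10):
        print(f"{optic.name}: {optic.total_gbps}G, "
              f"{optic.fibers_used} of {optic.connector_fibers} fibers used")
```

The unused positions in each connector matter when you order trunk cables, since some assemblies populate all positions and others only the ones in use.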

4. The Role of DAC and AOC Cables

- AOCs combine optical fiber, transceivers, and control chips to convert electrical signals to optical with higher performance and lower power consumption. They are lightweight and immune to electromagnetic interference.

- DACs are cost-effective for short-distance connections within a rack or between adjacent racks. They are common for 10G, 25G, and 40G links, and QSFP28 DACs are also available for 100G.

AddOn Networks and Asterfusion provide a wide range of 100G optical modules and cables, including short-reach, long-reach, and energy-efficient (“green”) options with lifetime warranties and broad vendor compatibility.

Selecting Your 100G Equipment

Key considerations when choosing 100G switches include:

- Performance: ASICs and CPU

High throughput and ultra-low latency ASICs like Marvell Teralynx 7 or Falcon ensure the switch can handle demanding AI, HPC, or cloud workloads. The CPU must manage control plane tasks efficiently.

- Port Flexibility: The QSFP28 Advantage

QSFP28 supports high port density, multi-speed connections (100G, 50G, 25G), and breakout capability, maximizing space and investment. For instance, Asterfusion CX532P-N features 32x100G QSFP28 ports, and CX564P-N has 64x100G ports.

- Power Efficiency and Cooling

Look for hot-swappable power supplies and fans, advanced cooling like Power to Connector (P2C) airflow, and “green” transceivers that cut power consumption by ~30%.

- Network Operating System (NOS)

Open NOS like SONiC and Cumulus Linux provide flexibility, automation, and feature-rich environments. Asterfusion switches come pre-installed with enterprise-grade SONiC NOS, supporting advanced features like VXLAN, BGP EVPN, RoCEv2, and automation APIs. NVIDIA Mellanox integrates tightly with Cumulus Linux for programmable networks. MikroTik uses RouterOS, known for customization but with a learning curve.

Choosing 100G Transceivers

QSFP28 transceivers convert electrical to optical signals for fiber links. Choose transceivers based on reach, fiber type, and power needs. AddOn Networks offers diverse 100G transceivers (short reach, green, long reach) with lifetime warranty, and Asterfusion supports various optics and cabling.

Leading 100G Switch and Equipment Vendors

| Vendor | Strengths | Considerations |
|---|---|---|
| Cisco | Market leader, security, AI features | Higher cost, complexity |
| Arista | Ultra-low latency, cloud-native | Premium pricing |
| Juniper | Security, AI automation | Smaller ecosystem |
| HPE | Energy efficient, P2C airflow | Fewer ultra-low latency models |
| Asterfusion | Cost-effective, ultra-low latency | Newer brand |
| NVIDIA Mellanox | Automation, HPC focus | Cloud/HPC focused |
| MikroTik | Customizable, affordable | Steeper learning curve |

Migrating from 10G/40G to 100G

Upgrading can be complex, but with proper planning, 100G offers simplified architecture and scalability.

1. Seamless Integration and Scalability

100G ports can replace multiple 10G or 40G links, reducing devices and complexity. Breakout ports and backward compatibility enable gradual migration. Existing fibers are often reusable, minimizing costly rip-and-replace efforts.
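A quick way to size the consolidation is to total the capacity of the links being retired and divide by 100G, rounding up. The sketch below does that with an optional growth margin. The function name, the `headroom` parameter, and the example link counts are assumptions for illustration.

```python
import math


def ports_100g_needed(link_count: int, link_gbps: float, headroom: float = 0.0) -> int:
    """Number of 100G ports that match the capacity of the existing links.

    headroom is an optional growth margin, e.g. 0.25 for 25% extra capacity.
    """
    if link_count < 0 or link_gbps <= 0 or headroom < 0:
        raise ValueError("invalid link count, speed, or headroom")
    total_gbps = link_count * link_gbps * (1 + headroom)
    return math.ceil(total_gbps / 100)


if __name__ == "__main__":
    print("10 x 10G ->", ports_100g_needed(10, 10), "x 100G")
    print(" 8 x 40G ->", ports_100g_needed(8, 40), "x 100G")
    print("10 x 10G with 25% headroom ->", ports_100g_needed(10, 10, headroom=0.25), "x 100G")
```

This counts raw capacity only. Redundancy requirements usually set a floor of at least two uplinks per device regardless of what the arithmetic says.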

2. Harnessing RoCE for Lossless Performance

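RDMA over Converged Ethernet version 2 (RoCEv2) lets servers read and write each other's memory directly over routed Ethernet, bypassing the host CPU and kernel network stack. This lowers latency and jitter, which is critical for storage, AI, and HPC clusters. RoCEv2 performs best on a lossless fabric, which the switches provide by managing congestion rather than dropping packets.

The switch features that make this possible include: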

- Priority Flow Control (PFC)

- Explicit Congestion Notification (ECN)

- Enhanced Transmission Selection (ETS)

- Data Center Bridging Exchange (DCBX)

Asterfusion CX-N switches support RoCEv2 with latency outperforming some InfiniBand switches.
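To make the ECN piece more concrete, switches commonly mark packets with a probability that rises as the egress queue fills, in the style of RED/WRED. The sketch below models that curve only. It is not taken from any vendor's implementation, and the threshold names (`kmin_kb`, `kmax_kb`, `pmax`) and example values are assumptions chosen for illustration.

```python
def ecn_mark_probability(queue_kb: float, kmin_kb: float,
                         kmax_kb: float, pmax: float) -> float:
    """RED-style ECN marking curve.

    Below kmin nothing is marked, above kmax everything is marked,
    and in between the probability rises linearly from 0 to pmax.
    """
    if not 0 <= kmin_kb < kmax_kb:
        raise ValueError("need 0 <= kmin_kb < kmax_kb")
    if not 0 <= pmax <= 1:
        raise ValueError("pmax must be between 0 and 1")
    if queue_kb <= kmin_kb:
        return 0.0
    if queue_kb >= kmax_kb:
        return 1.0
    return pmax * (queue_kb - kmin_kb) / (kmax_kb - kmin_kb)


if __name__ == "__main__":
    # Hypothetical thresholds; real values are platform and workload specific.
    for depth in (50, 250, 500):
        p = ecn_mark_probability(depth, kmin_kb=100, kmax_kb=400, pmax=0.2)
        print(f"queue {depth} KB -> mark probability {p:.2f}")
```

Marked packets tell RoCEv2 senders to slow down before the queue overflows, so PFC pause frames act as a last resort rather than the primary control.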

Real-World Scenarios and Benefits

- Powering AI, HPC, and Financial Workloads

100G with ultra-low latency ASICs and RoCEv2 meets demands of AI training clusters, HPC, and high-frequency trading data centers requiring minimal latency and maximum throughput.

- Enhancing East-West Traffic and Cloud Scalability

Spine-leaf topology handles heavy server-to-server (east-west) traffic efficiently. Marvell Falcon switches with large routing tables and MAC capacities support multi-tenant cloud environments and overlay networks.

- Addressing Practical File Transfer Needs

Local file servers with 100G significantly speed transfers and backups, solving sync issues and improving productivity.
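The file transfer gain is easy to estimate from link speed alone. The sketch below computes the best-case transfer time for a given data size. The function name and the `efficiency` parameter are assumptions; in practice storage throughput and protocol overhead often limit the rate well before the link does.

```python
def transfer_seconds(size_gb: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Best-case time to move size_gb gigabytes (10^9 bytes) over a link.

    efficiency scales the usable share of the link, e.g. 0.9 for 90%.
    """
    if size_gb < 0 or link_gbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("invalid size, link speed, or efficiency")
    return size_gb * 8 / (link_gbps * efficiency)


if __name__ == "__main__":
    # Hypothetical 1 TB backup set.
    for speed in (10, 40, 100):
        minutes = transfer_seconds(1000, speed) / 60
        print(f"1 TB over {speed}G: about {minutes:.1f} minutes at full line rate")
```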

Overcoming Challenges in 100G Deployment

- Initial Investment and Long-Term Value

High upfront costs for switches, transceivers, and infrastructure are offset by scalability, reduced downtime, and operational savings.

- Power Management and Operational Complexity

Select energy-efficient gear and advanced cooling (e.g., P2C airflow, cold batteries). Open NOS like SONiC simplifies management and monitoring, easing operational burden.

FAQ

Q1: What is the main benefit of Spine-Leaf architecture in a 100G data center?

A: Non-blocking, low-latency connectivity with linear scalability and improved resilience.

Q2: Can I reuse existing fiber cabling when upgrading to 100G?

A: Often yes, if cabling supports required fiber types and connectors.

Q3: How does RoCEv2 improve 100G network performance?

A: By enabling lossless Ethernet, reducing latency and CPU load through congestion management.

Q4: How much does it cost to build a small data center?

A: The cost to build a small data center varies widely depending on location, design, and capacity. Generally:

- Construction costs range from $600 to $1,000 per square foot.

- Per MW IT load, the cost ranges from $7 million to $12 million.

- A 1,000 sq ft facility may cost ~$1 million, while a small edge or micro data center may cost around $500,000 to $2 million depending on redundancy and connectivity needs.

Q5: How much does a 100 MW data center cost?

A: A 100 MW hyperscale data center typically costs between $700 million and $1.2 billion, based on a standard estimate of $7 million to $12 million per MW of IT load. Power capacity is a key constraint for data centers, and large-scale facilities often include advanced power distribution, cooling, and network infrastructure to handle massive workloads efficiently.

Conclusion

Building a 100G data center is a strategic investment that future-proofs your infrastructure. By carefully planning your network architecture with Spine-Leaf design, selecting appropriate cabling and high-performance equipment, and leveraging technologies like RoCE for lossless data transfer, you can unlock significant gains in speed, scalability, and efficiency.

The transition from 10G/40G can be smooth with thoughtful migration strategies and flexible hardware. While challenges exist, the benefits for AI, HPC, cloud, and enterprise workloads are undeniable.

Ready to accelerate your 100G deployment? Explore practical solutions, architecture designs, and transceiver options on our dedicated 100G data center page.
