This article, written by Jeremy Filliben, describes how he migrated a production data center from a Catalyst-based network to a Nexus-based one, including the Layer 2 and Layer 3 issues he ran into along the way.

Migrating from Catalyst to Nexus —By Jeremy Filliben

There are already a couple of great resources on the Internet for network engineers who are migrating from a Catalyst-based data center to a Nexus-based data center. Two of my favorites are Cisco's docwiki.cisco.com site, which hosts a set of IOS-to-NX-OS comparison pages, and a page put together by Carole Warner Reece of Chesapeake Netcraftsmen. Both of these resources helped me quite a bit in working out the syntax of NX-OS commands. This blog post is an attempt to supplement that base of knowledge with my own experiences in converting a production data center over to the NX-OS platform.

My Catalyst network was a fairly standard data center design, with a pair of 6509s in the core and multiple Top-of-Rack switches cascaded below. We used Rapid-PVST, with blocking occurring in the middle of a TOR stack.

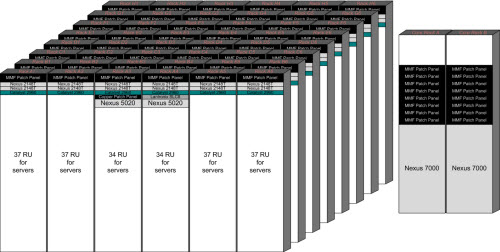

The new Nexus environment looks pretty much the same. We have a pair of Nexus 7010s in the core with a layer of Nexus 5020 switches at the edge. Each 5020 supports four to six FEXs, and each FEX uplinks to only a single 5020 switch. This new network was built alongside the existing Catalyst one. The plan was to interconnect the two environments at the cores (at Layer 2) and migrate server ports over whenever possible. Once we reached a critical mass of devices on the Nexus side of the network, we planned to move the Layer 3 functionality from the IOS environment to the NX-OS side.

A few months ago we finished building out the Nexus LAN and interconnected it to the Catalyst LAN. We used vPC on the Nexus side to reduce the amount of STP blocking. All was well, and we began migrating servers to the Nexus infrastructure without any issues. Eventually we reached our pre-determined “critical mass” and scheduled a change window to migrate the SVIs to the Nexus side and reconfigure the core Catalyst 6509s as Layer 2-only devices. The configuration work for this migration was around 1500 lines, so it was not by any means trivial, but it was also quite repetitive due to the number of SVIs and the size of the third-party static routing tables. Here’s where the fun began.

The first issue we ran into was with an extranet BGP peering connection through a firewall. In our design, we connect various third parties to an aggregation router in a DMZ. The routes for these third parties are advertised to our internal network via BGP, through a statically-routed firewall. Most of our third-party connections also use BGP, so we receive a variety of BGP AS numbers. In two cases, the BGP AS number chosen by the third party overlaps with one of our internal AS numbers. To work around this, we had enabled the “allowas-in” knob on the internal BGP peering routers. Unfortunately this knob will not be available on the Nexus platform until NX-OS 5.1. I should have caught this in my pre-implementation planning. We fixed it with a small set of static routes. Our medium-term plan is to work with the two third parties to change their AS numbers, and eventually we will implement “allowas-in” once we upgrade to NX-OS 5.1. Another interesting thing to note about BGP on NX-OS is that the routers check the AS-path for loops for both eBGP and iBGP neighbors. IOS does not do any loop-checking on iBGP advertisements.
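To make the AS-path issue concrete, here is a minimal Python sketch of the loop check and of how an "allowas-in" style allowance relaxes it. The function name and the AS numbers are hypothetical and chosen only for illustration; this models the general idea, not the actual IOS or NX-OS implementation.

```python
def accepts_route(as_path, local_as, allowas_in=0):
    """Return True if a route with this AS path passes loop detection.

    allowas_in is the number of times the local AS may appear in the
    received path before the route is rejected. With the default of 0,
    any occurrence of the local AS is treated as a loop.
    """
    occurrences = as_path.count(local_as)
    return occurrences <= allowas_in


# Hypothetical numbers: 65010 is an internal AS that a third party also uses.
third_party_path = [65100, 65010]

print(accepts_route(third_party_path, local_as=65010))                # False
print(accepts_route(third_party_path, local_as=65010, allowas_in=1))  # True
print(accepts_route([65100, 65200], local_as=65010))                  # True
```

Without the allowance, the overlapping route is dropped as a loop, which is why static routes were needed as a stopgap.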

With that behind us, we moved on to the SVIs and migrating our spanning-tree root to the Nexus switches. The SVI migration was trivial, but the STP root migration caused issues. We were bitten by the behavior of Bridge Assurance, a default feature in NX-OS that was unavailable in our version of IOS (SXF train). Surprisingly, the lack of Bridge Assurance support didn’t prevent the Catalyst<->Nexus interconnect from working while the STP root was on the Catalyst side of the network, but once we moved the root to the Nexus side, Bridge Assurance shut down the interconnects. The only acceptable solution to this issue (that I could find) was to disable Bridge Assurance globally on the Nexus switches. My error here was taking for granted that my interconnect was properly configured, because it had been working for several months.

After encountering this issue I took another look at Terry Slattery’s blog post on Bridge Assurance, and at Cisco’s Understanding Bridge Assurance IOS Configuration Guide. The problem I experienced is that Bridge Assurance requires switches to send BPDUs upstream (towards the root), while normal RSTP behavior is to suppress the sending of BPDUs towards the root. When the Catalyst side of the network contained the root, the Catalyst switches sent BPDUs downstream to the Nexus switches (normal RSTP behavior) and the Nexus switches sent BPDUs upstream to the Catalysts, which is abnormal for RSTP, but harmless. The Catalyst switches simply discarded the BPDUs. Once the STP root was migrated, the Nexus switches sent BPDUs to the Catalysts (normal), and the Catalysts suppressed all BPDUs towards the Nexus switches (normal for RSTP, but not correct for Bridge Assurance). For the first few seconds, this was not a problem and forwarding worked fine, but eventually the Bridge Assurance timeout was reached and the Nexus switches put the ports into BA-Inconsistent state. The “right” way to solve this issue is to upgrade the Catalyst switches to SXI IOS and re-enable Bridge Assurance. My preference is to simply retire the 6509s, so I’ll have to keep tabs on the migration effort. If it looks like it will drag on for a while, I’ll schedule the upgrades.
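The before-and-after behavior described above can be sketched as a toy model in Python. The function names are hypothetical, and the 6-second timeout is an assumed placeholder rather than a value taken from this migration; the point is only to show why the interconnect worked with the root on the Catalyst side and failed once the root moved.

```python
def catalyst_sends_bpdus(root_side, catalyst_supports_ba):
    """Does the Catalyst send BPDUs towards the Nexus on the interconnect?

    A plain RSTP switch only sends BPDUs away from the root. A switch
    running Bridge Assurance sends them in both directions.
    """
    return root_side == "catalyst" or catalyst_supports_ba


def nexus_port_state(root_side, catalyst_supports_ba, seconds_elapsed,
                     ba_timeout_s=6):  # assumed timeout, for illustration only
    """State of the Nexus interconnect port, which has Bridge Assurance on."""
    if catalyst_sends_bpdus(root_side, catalyst_supports_ba):
        return "forwarding"
    # No BPDUs arrive: forwarding continues until the timeout expires.
    if seconds_elapsed < ba_timeout_s:
        return "forwarding"
    return "BA-inconsistent"


# Root on the Catalyst side, no Bridge Assurance support on the Catalyst.
print(nexus_port_state("catalyst", False, seconds_elapsed=60))  # forwarding
# Root moved to the Nexus side: fine briefly, then the port is blocked.
print(nexus_port_state("nexus", False, seconds_elapsed=2))      # forwarding
print(nexus_port_state("nexus", False, seconds_elapsed=60))     # BA-inconsistent
# Same topology after upgrading the Catalyst to a release with the feature.
print(nexus_port_state("nexus", True, seconds_elapsed=60))      # forwarding
```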

(Edit on 8/9/2010 – commenter “wojciechowskipiotr” noted that configuring the port-channels towards the 6500s with “spanning-tree port type normal” would also disable Bridge Assurance, but only for those specific ports. If I get an opportunity to try this configuration, I will report on whether it is successful.)

The remaining issues I faced were minor, but in some cases are still lingering or just annoyed me:

1) Static routes with “name” tags are unavailable. I had gotten into the habit of adding named static routes to the network, especially for third-party routing. It appears that NX-OS does not support this.

2) VTP is unavailable. Based on conversations with other networkers, I’m probably the last living fan of VTP. I am sad to see it go. Fortunately in the Nexus environment there are fewer places to add VLANs (only the 5ks and 7ks).

3) Some of the LAN port defaults are different (when compared to IOS). For example, QoS trust is enabled by default. Also, “shutdown” is the default state for all ports. If a port is active, it’ll have a “no shutdown” in the config.

4) OSPF default reference bandwidth is now 40 Gbps, rather than the 100 Mbps value in IOS. This is a good thing, since 100 Mbps is woefully low in today’s networks.

5) Proxy-arp is disabled by default. Our migration uncovered a few misconfigured hosts. Not a big deal, but it is noteworthy. Proxy-arp can be enabled per SVI, but do you really want to do it?

6) We ran into a WCCP bug in NX-OS 4.2(3). For some reason NX-OS is not load-balancing between our two WAN accelerators. Whichever WAE is activated last becomes the sole WAE that receives packets. I have an active TAC case to find a solution to this issue. For now, we are running through a single WAN accelerator, which reduces the effectiveness of our WAN acceleration solution.
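To put numbers on item 4, the short Python sketch below compares interface costs under the two default reference bandwidths. It assumes the usual OSPF rule of dividing the reference bandwidth by the interface bandwidth, with a minimum cost of 1; the function name is hypothetical.

```python
def ospf_cost(interface_mbps, reference_mbps):
    """Interface cost = reference bandwidth / interface bandwidth, minimum 1."""
    return max(1, reference_mbps // interface_mbps)


IOS_REFERENCE_MBPS = 100        # IOS default: 100 Mbps
NXOS_REFERENCE_MBPS = 40_000    # NX-OS default: 40 Gbps

for link_mbps in (100, 1_000, 10_000):
    ios = ospf_cost(link_mbps, IOS_REFERENCE_MBPS)
    nxos = ospf_cost(link_mbps, NXOS_REFERENCE_MBPS)
    print(f"{link_mbps:>6} Mbps link: IOS cost {ios}, NX-OS cost {nxos}")

# Prints:
#    100 Mbps link: IOS cost 1, NX-OS cost 400
#   1000 Mbps link: IOS cost 1, NX-OS cost 40
#  10000 Mbps link: IOS cost 1, NX-OS cost 4
```

With the IOS default, every link of 100 Mbps or faster gets the same cost, so OSPF cannot tell a Fast Ethernet link from a 10 Gigabit one. While both platforms share an OSPF domain, it is worth checking that the reference bandwidth is consistent on all routers.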

I hope this helps someone with their own migration. This is going to be a common occurrence in our industry for the next few years, especially if Cisco has their way. If anyone has questions, please send me an email or post a comment.

—Original Doc from Migrating from Catalyst to Nexus

Catalyst 6500 Migration to Nexus 7000 (PDF)

A Catalyst 6500 configuration conversion to a Nexus 7000 will include the following:

Initial Requirements

• A scoping call prior to the scheduling of any event to determine whether the work performed falls within the standard offering or requires additional services.

• Each physical 6500 configuration will count as a single conversion, whether it is replaced by a Nexus 7000 or a VDC within the Nexus 7000.

• All hardware will be assembled and racked prior to engagement.

• Pricing will be based on two standard service conversions per day. Pricing for any additional services, if required, will be determined.

Standard Services Configuration

NX-OS:

• Version Upgrade to Current Revision if required

• EPLD Version upgrade if required

• Management Interface Configuration

• CMP Configuration

Layer 2 features:

• VLANs

• Private VLANs

• Trunking

• Port-Channels

• VPC

• Spanning-Tree configuration

• Spanning-Tree Enhancements

• Portfast

• BPDU Guard

• Root Guard

• Loop Guard

• UDLD

• IGMP

Layer 3 features:

• IP Configuration

• IGP Routing Protocols

• 2 dynamic routing protocols

• OSPF, EIGRP, ISIS, RIP

• Authentication

• Route Summarization

• OSPF Special Areas

o Static

o IGP-IGP Redistribution

• Route Filtering

• Administrative Distance

• BGP

• Advertise routes into BGP Table using IGP origination

• Default route acceptance only (prefix-list filter)

• Basic IBGP/EBGP Peering (no filters)

• FHRP

• HSRP, VRRP, GLBP

• Object Tracking

• Timers

• Authentication

Security Features:

• Access-lists

• VACLs

• Port Security

• User Accounts (Network-Admin, Network-Operator roles only)

• CoPP predefined policy (configured from initial setup script)

Additional Service Configurations

All other feature requirements will be reviewed prior to the conversion and estimates will be provided. These features include but are not limited to:

• QOS

• BGP Policy

• Prefix-lists

• Route-maps

• AS-Path Filters

• Confederations

• Policy-Based Routing

• Multicast

• Advanced Security Features

• Traffic Integrity

• CoPP modifications from default options

• Admission Control

• SNMP Management

• VRF Configuration

• AAA

• OTV

• DCNM

• Best Practice Evaluation

Note: The customer will need to provide current and future network diagrams and identify the IP addressing scheme used, along with any non-standard IP protocols used by custom applications.
